Running the Set-PlannerUserPolicy Cmdlet Has an Unexpected Effect

Although Planner supports a Graph API, the API focuses on management of plans, tasks, buckets, categories, and other objects used in the application rather than plan settings like notifications or backgrounds. It’s good at reporting plans and tasks or populating tasks in a plan, but it doesn’t include any support for tenant-wide application settings. In most cases, these gaps don’t matter. The Planner UI has the necessary elements to deal with notification and background settings, neither of which is likely to change often. But tenant-wide settings are a dirty secret of Planner. Let me explain why.

The Planner Tenant Admin PowerShell Module

In 2018, Microsoft produced the Planner Tenant Admin PowerShell module. With such a name, you’d expect this module to manage important settings for Planner. That is, until you read the instructions about how to use the module, which document the odd method chosen by the Planner development group to distribute and install the software.

Even the Microsoft Commerce team, who probably have the reputation for the worst PowerShell module in Microsoft 365, manage to publish their module through the PowerShell Gallery. But Planner forces tenant administrators to download a ZIP file, “unblock” two files, and manually load the module. The experience is enough to put many administrators off using Planner PowerShell.

But buried in this unusual module is the ability to block users from being able to delete tasks created by other people. Remember that most plans are associated with Microsoft 365 Groups. The membership model for groups allows members to have the same level of access to group resources, including tasks in a plan. Anyone can delete tasks in a plan, and that’s not good when Planner doesn’t support a recycle bin or another recovery mechanism.

What the Set-PlannerUserPolicy Cmdlet Does

The Set-PlannerUserPolicy cmdlet from the Planner Tenant Admin PowerShell module allows tenant administrators to block users from deleting tasks created by other people. It’s the type of function that you’d imagine should be in plan settings where a block might apply to plan members. Or it might be a setting associated with a sensitivity label that applies to all plans in groups assigned the label. Alternatively, a setting in the Microsoft 365 admin center could impose a tenant-wide block.

In any case, none of those implementations are available. Instead, tenant administrators must run the Set-PlannerUserPolicy cmdlet to block individual users with a command like:

Set-PlannerUserPolicy -UserAadIdOrPrincipalName Kim.Akers@office365itpros.com -BlockDeleteTasksNotCreatedBySelf $True

The Downside of the Set-PlannerUserPolicy Cmdlet

The point of this story is that assigning the policy to a user account also blocks the ability of the account to delete plans, even if the account is a group owner. This important fact is not mentioned in any Microsoft documentation.

I discovered the problem when investigating how to delete a plan using PowerShell. It seemed a simple process. The Remove-MgPlannerPlan cmdlet from the Microsoft Graph PowerShell SDK requires the plan identifier and its “etag” to delete a plan. This example deletes the second plan in a set returned by the Get-MgPlannerPlan cmdlet:

[array]$Plans = Get-MgPlannerPlan -GroupId $GroupId
$Plan = $Plans[1]
$Tag = $Plan.additionalProperties.'@odata.etag'
Remove-MgPlannerPlan -PlannerPlanId $Plan.Id -IfMatch $Tag

However, the cmdlet failed. The same problem occurred when running the equivalent Graph API request:

$Headers = @{}

$Headers.Add("If-Match", $plan.additionalproperties['@odata.etag'])

$Uri = ("https://graph.microsoft.com/v1.0/planner/plans/{0}" -f $Plan.Id)

Invoke-MgGraphRequest -uri $Uri -Method Delete -Headers $Headers

In both cases, the error was 403 forbidden with explanatory text like:

{"error":{"code":"","message":"You do not have the required permissions to access this item, or the item may not exist.","innerError":{"date":"2024-06-13T17:10:10","request-id":"d5bf922c-ea9b-48c6-9629-d9749ab7ec51","client-request-id":"6a533cf8-4396-4743-acf1-a40c32dd11bc"}}}

Even more bafflingly, the Planner browser client refused to let me delete a plan too. At least, the client accepted the request but then failed with a very odd error (Figure 1). After dismissing the error, my access to the undeleted plan continued without an issue.

A Mystery Solved

Fortunately, I have some contacts inside Microsoft who were able to check why my attempts to delete plans failed and report back that the deletion policy set on my account blocked the removal of both tasks created by other users and plans. The first block was expected, the second was not. I’m glad that the mystery is solved but unimpressed that Microsoft does not document this behavior. They might now…

The moral of the story is not to run PowerShell cmdlets unless you know what their effect will be. I wish someone had told me that a long time ago.

Longstanding Service Issue Retrieving SharePoint Usage Data

The Microsoft 365 ecosystem is so large that it’s hard to keep track of all the changes that show up in different workloads. We’ve always known about the difficulties of tracking new features, deprecations, and other issues, but sometimes it takes a user report to focus attention on a specific problem.

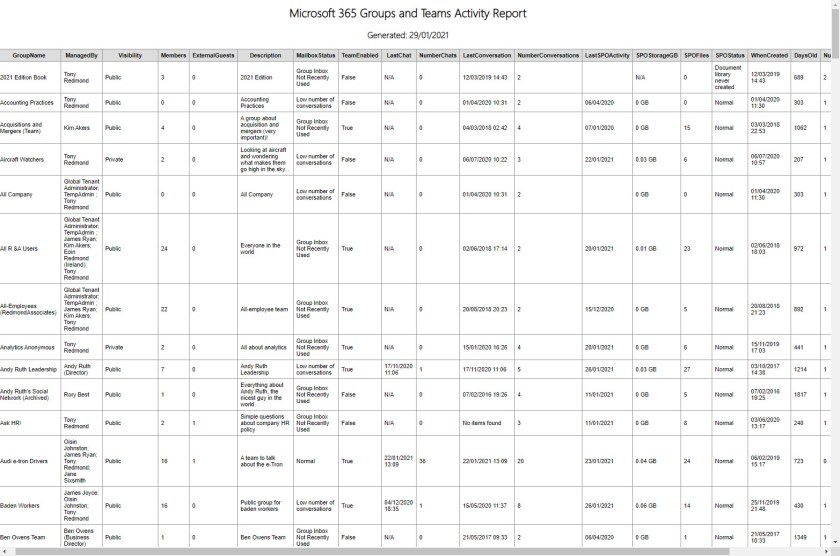

An example is when a reader noted that the Graph-based script to report the storage quota used by SharePoint sites no longer included site URLs in the output (Figure 1). The original script (from 2020) used a registered Entra ID app to authenticate and used the Graph getSharePointSiteUsageDetail API to fetch site detail data.

Problems in the Graph APIs Accessing SharePoint Usage Data

When I investigated the problem, I decided to update the script code to use the Microsoft Graph PowerShell SDK instead. The update did nothing to retrieve the missing data. This isn’t surprising because the problem lies in the Graph API rather than the way the API is called.

The Microsoft 365 admin center uses the same Graph API for its SharePoint site usage report and the same problem of no site URL data is seen there (Figure 2).

Even worse, the SharePoint site activity report in the Microsoft 365 admin center displays no data (Figure 3).

The problem occurs because the getSharePointActivityUserDetail API returns no data whatsoever. Here’s an example of using the API in PowerShell in an attempt to retrieve SharePoint Online user activity for the last 180 days. The retrieved data should end up in the SPOUserDetail.CSV file.

$Uri = "https://graph.microsoft.com/v1.0/reports/getSharePointActivityUserDetail(period='D180')"
Invoke-MgGraphRequest -Uri $Uri -Method GET -OutputFilePath SPOUserDetail.CSV

However, the output file is completely empty apart from the column headers (Figure 4).

The same approach works perfectly with other usage data. For instance, this query works nicely to fetch Exchange Online usage data:

$Uri = "https://graph.microsoft.com/v1.0/reports/getEmailActivityUserDetail(period='D180')"
Invoke-MgGraphRequest -Uri $Uri -Method GET -OutputFilePath EmailUsage.CSV
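Because the SharePoint request succeeds but delivers only column headers, a script that depends on the data won’t see an error. A simple guard is to count the rows after the download. This is a minimal sketch: the function name Test-UsageReportHasData is hypothetical, and the sample file (with an illustrative header line) is written to the temporary folder purely to mimic the empty output.

```powershell
# Hypothetical helper: returns $True if a usage report CSV holds at least one data row
function Test-UsageReportHasData {
    param ([Parameter(Mandatory)][string]$Path)
    # @() guarantees an array; Import-Csv returns nothing for a header-only file
    $Rows = @(Import-Csv -Path $Path)
    return ($Rows.Count -gt 0)
}

# Illustration only: a header-only file like the one produced by the failing request
$SampleFile = Join-Path ([System.IO.Path]::GetTempPath()) 'SPOUserDetail-sample.csv'
Set-Content -Path $SampleFile -Value 'Report Refresh Date,User Principal Name,Last Activity Date'

If (-not (Test-UsageReportHasData -Path $SampleFile)) {
    Write-Warning "No usage data returned - check service health before relying on the report"
}
```

In a real script, the check would run against the file written by Invoke-MgGraphRequest -OutputFilePath.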

A Known Service Issue with SharePoint Usage Data

It’s not surprising that an API should have a problem. The APIs haven’t changed recently, so the root cause is more likely due to a change in the SharePoint Online back end. This feeling is reinforced by service health report SP676147 filed on 21 September 2023 (last updated 9 February 2024) that blithely says that “SharePoint and OneDrive URLs may not be displayed in some usage reports.”

Microsoft goes on to note that:

“We’re continuing our work through the validation of multiple potential mitigation strategies to display the URLs of the affected site usage reports. Due to the complexity of the scenarios involved we anticipate this may take additional time.”

The next update for the service health announcement is due on 1 March 2024. What I’m struggling with is that the usage reports included site URLs without any difficulty for years. Why it should suddenly become an issue is inexplicable. And taking over six months to find a solution is even more so.

Microsoft suggests that developers use the Graph Sites API to retrieve the site URL. For example:

$Uri = ("https://graph.microsoft.com/v1.0/sites/{0}" -f $Site.'Site Id')

$SiteData = Invoke-MgGraphRequest -Uri $Uri -Method GET

This works, but only when using an application permission. Using delegated permissions restricts access to sites that the signed-in user is a member of.

SharePoint PowerShell Still Works

Fortunately, it’s possible to get the site storage quota information using the SharePoint Online management PowerShell module. The Graph APIs read from a usage data warehouse that’s populated using background processes. The data is always at least two days old, but it’s much faster to access than using PowerShell to check the storage for each site. But needs must, and at least the old method still works.

I admit forgetting about the service health announcement, perhaps because it’s been ongoing for so long. I’m genuinely surprised that Microsoft is still working on something that seems so innocuous. And I’m even more surprised that customers aren’t making more of a fuss because the URL is the fundamental way to identify a SharePoint site.

Learn how to exploit the data available to Microsoft 365 tenant administrators through the Office 365 for IT Pros eBook. We love figuring out how things work.

Use Graph SDK Cmdlets to Apply Annual Updates to Corporate Branding for Entra ID Sign-in Screen

Back in 2020, I took the first opportunity to apply corporate branding to a Microsoft 365 tenant and added custom images to the Entra ID web sign-in process. Things have moved on and company branding has its own section in the Entra ID admin center with accompanying documentation. Figure 1 shows some custom branding elements (background screen, banner logo, and sign-in page text) in action.

Entra ID displays the custom elements after the initial generic sign-in screen when a user enters their user principal name (UPN). The UPN allows Entra ID to identify which tenant the account comes from and if any custom branding should be displayed.

Company branding is available to any tenant with Entra ID P1 or P2 licenses. The documentation mentions that Office 365 licenses are needed to customize branding for the Office apps. This mention is very non-specific. I assume it means Office 365 E3 and above enterprise tenants can customize branding to appear in the web Office apps. Certainly, no branding I have attempted has ever affected the desktop Office apps.

Scripting the Annual Branding Refresh

Every year, I like to refresh the custom branding elements, if only to update the sign-in text to display the correct year. It’s certainly easy to make the changes through the Entra ID admin center (Figure 2), but I like to do it with PowerShell because I can schedule an Azure Automation job to run at midnight on January 1 and have the site customized for the year.

The Graph APIs include the organizational branding resource type to hold details of a tenant’s branding (either default or custom). Updating the properties of the organizational branding resource type requires the Organization.ReadWrite.All permission. Properties are divided into string types (like the sign-in text) and stream types (like the background image).

The script/runbook executes the following steps:

- Connects to the Graph using a managed identity.

- Retrieves details of the current sign-in text using the Get-MgOrganizationBranding cmdlet.

- Checks if the sign-in text has the current year. If not, updates the sign-in text and runs the Update-MgOrganizationBranding cmdlet to refresh the setting. The maximum size of the sign-in text is 1024 characters. The new sign-in text should be displayed within 15 minutes.

- Checks if a new background image is available. The code below uses a location on a local disk to allow the script to run interactively. To allow the Azure Automation runbook to find the image, it must be stored in a network location like a web server. The background image should be sized 1920 x 1080 pixels and must be less than 300 KB. Entra ID refuses to upload larger files.

- If a new image is available, updates the branding configuration by running the Invoke-MgGraphRequest cmdlet. I’d like to use the Set-MgOrganizationBrandingLocalizationBackgroundImage cmdlet from the SDK, but it has many woes (issue #2541), not least the lack of a content type parameter to indicate the type of image being passed. A new background image takes longer to distribute across Microsoft’s network but should be available within an hour of the update.

Connect-MgGraph -Scopes Organization.ReadWrite.All -NoWelcome

# If running in Azure Automation, use Connect-MgGraph -Scopes Organization.ReadWrite.All -NoWelcome -Identity

$TenantId = (Get-MgOrganization).Id

# Get current sign-in text

[string]$SignInText = (Get-MgOrganizationBranding -OrganizationId $TenantId -ErrorAction SilentlyContinue).SignInPageText

If ($SignInText.Length -eq 0) {

# Nothing to update, so stop the script (return exits cleanly at script scope)
Write-Output "No branding information found - exiting" ; return

}

[string]$CurrentYear = Get-Date -format yyyy

$DefaultYearImage = "c:\temp\DefaultYearImage.jpg"

$YearPresent = $SignInText.IndexOf($CurrentYear)

If ($YearPresent -ge 0) {

Write-Output ("Year found in sign in text is {0}. No update necessary" -f $CurrentYear)

} Else {

Write-Output ("Updating copyright date for tenant to {0}" -f $CurrentYear )

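# Replace the first four-digit year (20xx) and keep any text that follows it

$NewSIT = ([regex]'20\d{2}').Replace($SignInText, $CurrentYear, 1)

# If the text contained no year at all, append the current year instead

If ($NewSIT -eq $SignInText) { $NewSIT = "{0} {1}" -f $SignInText, $CurrentYear }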

# Create hash table for updated parameters

$BrandingParams = @{}

$BrandingParams.Add("signInPageText",$NewSIT)

Update-MgOrganizationBranding -OrganizationId $TenantId -BodyParameter $BrandingParams

If (Test-Path $DefaultYearImage) {

Write-Output "Updating background image..."

$Uri = ("https://graph.microsoft.com/v1.0/organization/{0}/branding/localizations/0/backgroundImage" -f $TenantId)

Invoke-MgGraphRequest -Method PUT -Uri $Uri -InputFilePath $DefaultYearImage -ContentType "image/jpeg"

} Else {

Write-Output "No new background image available to update"

}

}

The script is available in GitHub.

Figure 2 shows the updated sign-in screen (I deliberately updated the year to 2025).

If you run the code in Azure Automation, the account must have the Microsoft.Graph.Authentication and Microsoft.Graph.Identity.DirectoryManagement modules loaded as resources in the automation account to use the cmdlets in the script.
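The script only tests whether the image file exists before uploading it. Because Entra ID refuses background images that aren’t under 300 KB, it can be worth checking the size first. This is a sketch under stated assumptions: the function name Test-BrandingBackgroundImage is hypothetical, “300 KB” is read as 300 × 1024 bytes (PowerShell’s 300KB constant), and the 1920 x 1080 pixel dimensions are not checked.

```powershell
# Hypothetical pre-upload check for a branding background image.
# Assumption: the size limit is 300KB (307,200 bytes); pixel dimensions are not checked.
function Test-BrandingBackgroundImage {
    param ([Parameter(Mandatory)][string]$Path)
    If (-not (Test-Path -Path $Path -PathType Leaf)) {
        Write-Warning ("Image file {0} not found" -f $Path)
        return $False
    }
    $SizeBytes = (Get-Item -Path $Path).Length
    If ($SizeBytes -ge 300KB) {
        Write-Warning ("{0} is {1:N0} bytes; Entra ID refuses images of 300 KB or more" -f $Path, $SizeBytes)
        return $False
    }
    return $True
}

# Example: gate the upload in the script on the check
# If (Test-BrandingBackgroundImage -Path "c:\temp\DefaultYearImage.jpg") { ...run Invoke-MgGraphRequest... }
```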

Full Corporate Branding Possible

The documentation describes a bunch of other settings that can be tweaked to apply full custom branding to a tenant. Generally, I prefer to keep customization light to reduce ongoing maintenance, but I know that many organizations are strongly attached to corporate logos, colors, and so on.

Corporate Branding for Entra ID Isn’t Difficult

Applying customizations to the Entra ID sign-in screens is not complicated. Assuming you have some appropriate images to use, updating takes just a few minutes with the Entra ID admin center. I only resorted to PowerShell to process the annual update, but you could adapt it to have different sign-in screens for various holidays, company celebrations, and so on.

Learn about using Entra ID and the rest of the Microsoft 365 ecosystem by subscribing to the Office 365 for IT Pros eBook. Use our experience to understand what’s important and how best to protect your tenant.

Keeping an Eye on App Permissions

The recent Midnight Blizzard attack on Microsoft corporate email accounts emphasized the level of threat that exists today. If attackers can compromise the world’s biggest software company, replete with thousands of skilled security professionals, what hope have the rest of us? Even if attackers might consider your tenant unworthy of their attention, the answer lies in paying attention to what happens in the tenant.

In the past, I’ve written about checking consent grants to apps for high-priority permissions. The script used in that article posts the results to a team channel in the hope that administrators read and respond to anything untoward. Recent events make it useful to discuss how to retrieve the set of permissions held by apps.

Hash Tables for Fast Permission Lookup

To find consents for high-priority permissions (like Mail.Send or Exchange.ManageAsApp), the script builds a set of hash tables to hold the role identifiers and their display names. For instance, this code builds a hash table of all Microsoft Graph roles (also called permissions or scopes):

$GraphApp = Get-MgServicePrincipal -Filter "AppId eq '00000003-0000-0000-c000-000000000000'"

$GraphRoles = @{}

ForEach ($Role in $GraphApp.AppRoles) { $GraphRoles.Add([string]$Role.Id, [string]$Role.Value) }

$GraphRoles

Name Value

---- -----

b0c13be0-8e20-4bc5-8c55-963c2… TeamsAppInstallation.ReadWriteAndConsentForTeam.All

926a6798-b100-4a20-a22f-a4918… ThreatSubmissionPolicy.ReadWrite.All

c2667967-7050-4e7e-b059-4cbbb… CustomAuthenticationExtension.ReadWrite.All

(etc.)

The script uses separate hash tables for Graph API permissions, Teams permissions, Entra ID permissions, Exchange Online permissions, and so on.

Having hash tables containing role identifiers and names makes it very easy to resolve the roles assigned to registered apps or the service principals used for enterprise apps. As you’ll recall, an enterprise app is an app created by Microsoft or an ISV that is preconfigured to authenticate against Entra ID and is capable of being used in any tenant. When an enterprise app is installed in a tenant, its service principal becomes the tenant-specific instantiation of the app and holds the permissions assigned to the app.

Finding App Permissions from an App’s Service Principal

To find the permissions assigned to an app, we find the service principal identifier for the app and use it to fetch the set of role assignments. Each role assignment allows the use of a Graph API permission or an administrative role (like Exchange.ManageAsApp) allowing the app to behave as if it was an administrator account holding the role:

$App = Get-MgApplication -Filter "displayName eq 'My registered app'"
$SP = Get-MgServicePrincipal -Filter "AppId eq '$($App.AppId)'"
[array]$AppRoleAssignments = Get-MgServicePrincipalAppRoleAssignment -All -ServicePrincipalId $SP.Id

A role assignment looks like this:

AppRoleId : 5b567255-7703-4780-807c-7be8301ae99b

CreatedDateTime : 05/04/2023 16:55:55

DeletedDateTime :

Id : HkiavhziPkSBG_2Lh0ibAx1voO6aUiJCm3NQwDYWeu8

PrincipalDisplayName : My registered app

PrincipalId : be9a481e-e21c-443e-811b-fd8b87489b03

PrincipalType : ServicePrincipal

ResourceDisplayName : Microsoft Graph

ResourceId : 14a3c489-ed6c-4005-96d1-be9c5770f7a3

AdditionalProperties : {}

We’re interested in the resource identifier (ResourceId) and AppRoleId properties. Together, these tell us the resource (like the Microsoft Graph or SharePoint Online) and role that the permission relates to. Because an app can hold many permissions, we loop through the array to retrieve each permission:

ForEach ($AppRoleAssignment in $AppRoleAssignments) {

$Permission = (Get-MgServicePrincipal -ServicePrincipalId `

$AppRoleAssignment.resourceId).appRoles | `

Where-Object Id -eq $AppRoleAssignment.AppRoleId | Select-Object -ExpandProperty Value

Write-Host ("The app {0} has the permission {1}" -f `

$AppRoleAssignment.PrincipalDisplayName, $Permission)

}

The advantage of the hash tables is that the script doesn’t need to keep running the Get-MgServicePrincipal cmdlet to fetch the set of app roles owned by a resource. Thus, we can simply say:

$Permission = $GraphRoles[$AppRoleAssignment.AppRoleId]
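To see the lookup in isolation, here is a self-contained sketch that swaps the live Graph data for hypothetical stand-ins. The role identifiers below are placeholders, not real Graph role IDs, and the assignment objects only mimic the two properties of Get-MgServicePrincipalAppRoleAssignment output that the lookup needs.

```powershell
# Hypothetical stand-in for the table built from the Graph service principal's AppRoles
$GraphRoles = @{
    '00000000-0000-0000-0000-000000000001' = 'Mail.Send'
    '00000000-0000-0000-0000-000000000002' = 'Sites.Read.All'
}

# Hypothetical role assignments shaped like Get-MgServicePrincipalAppRoleAssignment output
$AppRoleAssignments = @(
    [PSCustomObject]@{ PrincipalDisplayName = 'My registered app'; AppRoleId = '00000000-0000-0000-0000-000000000001' }
    [PSCustomObject]@{ PrincipalDisplayName = 'My registered app'; AppRoleId = '00000000-0000-0000-0000-000000000003' }
)

$Report = ForEach ($AppRoleAssignment in $AppRoleAssignments) {
    # One in-memory lookup replaces a Get-MgServicePrincipal call per assignment
    $Permission = $GraphRoles[[string]$AppRoleAssignment.AppRoleId]
    If ($null -eq $Permission) {
        # Not a Graph role: a full script would try its other hash tables here
        $Permission = "Unknown role {0}" -f $AppRoleAssignment.AppRoleId
    }
    "The app {0} has the permission {1}" -f $AppRoleAssignment.PrincipalDisplayName, $Permission
}
$Report
```

A miss in one table usually means the role belongs to another resource (Exchange Online, SharePoint Online, and so on), which is why the script keeps a separate hash table per resource.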

Other Methods to Report App Permissions

As is often the case with PowerShell, other methods exist to resolve the names of app permissions from role identifiers. To explore the possibilities, I suggest you look at Sean McAvinue’s article about how to generate a risk report or Vasil Michev’s application service principal inventory script.

One of the great things about PowerShell is the ease of repurposing code written by other people to expand and enhance functionality. In this context, “ease” means that it is easier to reuse code than write it from scratch. Some effort is often necessary to shoehorn the code into your scripts.

Vasil’s inventory script handles both delegated and application permissions. As an example of what I mean about code reuse, I took some of his code and fitted it into my script to generate a report about expiring app credentials (secrets and certificates). Figure 1 shows the output email with the high-priority permissions asterisked. Perhaps having highly-permissioned apps brought to the attention of administrators on a regular basis will force them to review what’s happening with apps.

You can download the updated script from GitHub. Happy coding!

Learn more about how the Microsoft 365 applications and the Graph really work on an ongoing basis by subscribing to the Office 365 for IT Pros eBook. Our monthly updates keep subscribers informed about what’s important across the Office 365 ecosystem.

Exploit Graph Usage Data Instead of PowerShell Cmdlets

The first report generated by Exchange administrators as they learn PowerShell is often a list of mailboxes. The second is usually a list of mailboxes and their sizes. A modern version of the code used to generate such a report is shown below.

Get-ExoMailbox -RecipientTypeDetails UserMailbox -ResultSize Unlimited | Sort-Object DisplayName | Get-ExoMailboxStatistics | Format-Table DisplayName, ItemCount, TotalItemSize -AutoSize

I call the code “modern” because it uses the REST-based cmdlets introduced in 2019. Many examples persist across the internet that use the older Get-Mailbox and Get-MailboxStatistics cmdlets.

Instead of piping the results of Get-ExoMailbox to Get-ExoMailboxStatistics, a variation creates an array of mailboxes and loops through the array to generate statistics for each mailbox.

[array]$Mbx = Get-ExoMailbox -RecipientTypeDetails UserMailbox -ResultSize Unlimited

Write-Host ("Processing {0} mailboxes..." -f $Mbx.count)

$OutputReport = [System.Collections.Generic.List[Object]]::new()

ForEach ($M in $Mbx) {

$MbxStats = Get-ExoMailboxStatistics -Identity $M.ExternalDirectoryObjectId -Properties LastUserActionTime

$DaysSinceActivity = If ($MbxStats.LastUserActionTime) { (New-TimeSpan -Start $MbxStats.LastUserActionTime).Days } Else { $null }

$ReportLine = [PSCustomObject]@{

UPN = $M.UserPrincipalName

Name = $M.DisplayName

Items = $MbxStats.ItemCount

Size = $MbxStats.TotalItemSize.Value.toString().Split("(")[0]

LastActivity = $MbxStats.LastUserActionTime

DaysSinceActivity = $DaysSinceActivity

}

$OutputReport.Add($ReportLine)

}

$OutputReport | Format-Table Name, UPN, Items, Size, LastActivity

In both cases, the Get-ExoMailboxStatistics cmdlet fetches information about the number of items in a mailbox, their size, and the last recorded user interaction. There’s nothing wrong with this approach. It works (as it has since 2007) and generates the requested information. The only downside is that it’s slow to run Get-ExoMailboxStatistics for each mailbox. You won’t notice the problem in small tenants where a script only needs to process a couple of hundred mailboxes, but the performance penalty mounts as the number of mailboxes increases.

Graph Usage Data and Microsoft 365 Admin Center Reports

Microsoft 365 administrators are probably familiar with the Reports section of the Microsoft 365 admin center. A set of usage reports are available to help organizations understand how active their users are in different workloads, including email (Figure 1).

The usage reports are based on the Graph Reports API, which makes email activity and mailbox usage data available through Graph API requests and Microsoft Graph PowerShell SDK cmdlets. Here are examples of fetching email activity and mailbox usage data with the SDK cmdlets. The specified period is 180 days, which is the maximum:

Get-MgReportEmailActivityUserDetail -Period 'D180' -Outfile EmailActivity.CSV
[array]$EmailActivityData = Import-CSV EmailActivity.CSV
Get-MgReportMailboxUsageDetail -Period 'D180' -Outfile MailboxUsage.CSV
[array]$MailboxUsage = Import-CSV MailboxUsage.CSV

I cover how to use Graph API requests in the Microsoft 365 user activity report. This is a script that builds up a composite picture of user activity across different workloads, including Exchange Online, SharePoint Online, OneDrive for Business, and Teams. One difference between the Graph API requests and the SDK cmdlets is that the cmdlets download data to a CSV file that must then be imported into an array before it can be used. The raw API requests can fetch data and populate an array in a single call. It’s just another of the little foibles of the Graph SDK.

The combination of email activity and mailbox usage allows us to replace calls to Get-ExoMailboxStatistics (or Get-MailboxStatistics, if you insist on using the older cmdlet). The basic idea is that the script fetches the usage data (as above) and references the arrays that hold the data to fetch the information about item count, mailbox size, etc.

You can download a full script demonstrating how to use the Graph usage data for mailbox statistics from GitHub.
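The core of the technique is turning the usage array into a hash table keyed on user principal name, so each mailbox costs one in-memory lookup instead of a Get-ExoMailboxStatistics call. The sketch below is not the downloadable script: the CSV column names are assumed from the mailbox usage report (check them against your own MailboxUsage.CSV), and the two users and their values are hypothetical stand-ins for Get-ExoMailbox and Graph output.

```powershell
# Illustration only: hypothetical rows in the assumed shape of the mailbox usage CSV
[array]$MailboxUsage = @'
User Principal Name,Display Name,Last Activity Date,Item Count,Storage Used (Byte)
kim.akers@contoso.com,Kim Akers,2024-02-18,15230,2147483648
ben.owens@contoso.com,Ben Owens,,120,1048576
'@ | ConvertFrom-Csv

# Build a lookup table keyed on UPN
$UsageLookup = @{}
ForEach ($Row in $MailboxUsage) { $UsageLookup[$Row.'User Principal Name'] = $Row }

# Hypothetical stand-in for the mailboxes returned by Get-ExoMailbox
$Mbx = @(
    [PSCustomObject]@{ UserPrincipalName = 'kim.akers@contoso.com'; DisplayName = 'Kim Akers' }
    [PSCustomObject]@{ UserPrincipalName = 'ben.owens@contoso.com'; DisplayName = 'Ben Owens' }
)

$OutputReport = [System.Collections.Generic.List[Object]]::new()
ForEach ($M in $Mbx) {
    $Usage = $UsageLookup[$M.UserPrincipalName]
    If ($null -eq $Usage) { Continue }   # no usage row, e.g. a very new mailbox
    $OutputReport.Add([PSCustomObject]@{
        UPN          = $M.UserPrincipalName
        Name         = $M.DisplayName
        Items        = [int]$Usage.'Item Count'
        SizeGB       = [math]::Round([long]$Usage.'Storage Used (Byte)' / 1GB, 2)
        LastActivity = $Usage.'Last Activity Date'
    })
}
$OutputReport | Format-Table Name, UPN, Items, SizeGB, LastActivity
```

Matching on UPN assumes the usage data isn’t obfuscated, which is why the privacy setting matters.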

User Data Obfuscation

To preserve user privacy, organizations can choose to obfuscate the data returned by the Graph and replace user-identifiable data with MD5 hashes. We obviously need non-obfuscated user data, so the script checks if the privacy setting is in force. If this is true, the script switches the setting to allow the retrieval of user data for the report.

$ObfuscatedReset = $False

If ((Get-MgBetaAdminReportSetting).DisplayConcealedNames -eq $True) {

$Parameters = @{ displayConcealedNames = $False }

Update-MgBetaAdminReportSetting -BodyParameter $Parameters

$ObfuscatedReset = $True

}

At the end of the script, the setting is switched back to privacy mode.

Faster but Slightly Outdated

My tests (based on the Measure-Command cmdlet) indicate that it’s much faster to retrieve and use the email usage data instead of running Get-ExoMailboxStatistics. At times, it was four times faster to process a set of mailboxes. Your mileage might vary, but I suspect that replacing cmdlets that need to interact with mailboxes with lookups against arrays will always be faster. Unfortunately the technique is not available for Exchange Server because the Graph doesn’t store usage data for on-premises servers.

One downside is that the Graph usage data is always at least two days behind the current time. However, I don’t think that this will make much practical difference because it’s unlikely that there will be much variation in mailbox size over a couple of days.

The point is that old techniques developed to answer questions in the earliest days of PowerShell might not necessarily still be the best way to do something. New sources of information and different ways of accessing and using that data might deliver a better and faster outcome. Always stay curious!

Insight like this doesn’t come easily. You’ve got to know the technology and understand how to look behind the scenes. Benefit from the knowledge and experience of the Office 365 for IT Pros team by subscribing to the best eBook covering Office 365 and the wider Microsoft 365 ecosystem.

Keep Group Display Names Short to Avoid Problems

A recent discussion revealed that Graph API requests against the Groups endpoint for groups with display names longer than 120 characters generate an error. As you might know, the Groups Graph API supports group display names up to a maximum of 256 characters, so an error occurring after 120 seems bizarre. Then again, having extraordinarily long group names is also bizarre (Figure 1).

Group display names longer than 30 or so characters make it difficult for clients to list groups or teams, which is why Microsoft’s recommendation for Teams clients says that between 30 and 36 characters is a good limit for a team name. Using very long group names also creates formatting and layout issues when generating output like the Teams and Groups activity report, especially if only one or two groups have very long names.
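If you want to catch overly long names before a group is created, a length check is trivial. This sketch uses a hypothetical function, Test-GroupDisplayNameLength, with thresholds taken from this discussion: 36 characters (the upper end of the Teams guidance), 120 characters (where basic filter queries start to fail), and 256 characters (the Groups API maximum).

```powershell
# Hypothetical helper: classify a proposed group display name against the limits discussed here
function Test-GroupDisplayNameLength {
    param ([Parameter(Mandatory)][string]$DisplayName)
    $Length = $DisplayName.Length
    If ($Length -gt 256)     { 'TooLong' }          # exceeds the Groups API maximum
    ElseIf ($Length -gt 120) { 'FilterProblems' }   # basic $filter queries return 400 Bad Request
    ElseIf ($Length -gt 36)  { 'HardToDisplay' }    # above the suggested Teams limit
    Else                     { 'OK' }
}

Test-GroupDisplayNameLength -DisplayName 'Sales Team'
```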

Replicating the Problem

In any case, here’s an example. After creating a group with a very long name, I populated a variable with the group’s display name (146 characters). I then created a URI to request the Groups endpoint to return any Microsoft 365 group that has the display name. Finally, I executed the Invoke-MgGraphRequest cmdlet to issue the request, which promptly failed with a “400 bad request” error:

$Name = "O365Grp-Team with an extraordinary long name that makes it much more than 120 characters so that we can have some fun with it with Graph requests"

$Uri = "https://graph.microsoft.com/v1.0/groups?`$filter= displayName eq '${name}'"

$Data = Invoke-MgGraphRequest -Uri $Uri -Method Get

Invoke-MgGraphRequest: GET https://graph.microsoft.com/v1.0/groups?$filter=%20displayName%20eq%20'O365Grp-Team%20with%20an%20extraordinary%20long%20name%20that%20makes%20it%20much%20more%20than%20120%20characters%20so%20that%20we%20can%20have%20some%20fun%20with%20it%20%20with%20Graph%20requests'

HTTP/1.1 400 Bad Request

Cache-Control: no-cache

The Get-MgGroup cmdlet also fails. This isn’t at all surprising because the Graph SDK cmdlets run the underlying Graph API requests, so if those requests fail, the cmdlets can’t apply magic to make everything work again:

Get-MgGroup -Filter "displayName eq '$Name'"
Get-MgGroup_List: Unsupported or invalid query filter clause specified for property 'displayName' of resource 'Group'.

The same happens if you try to use the Get-MgTeam cmdlet from the Microsoft Graph PowerShell SDK.

Get-MgTeam -Filter "displayName eq '$Name'"
Get-MgTeam_List: Unsupported or invalid query filter clause specified for property 'displayName' of resource 'Group'.
Status: 400 (BadRequest)
ErrorCode: BadRequest
Date: 2023-10-06T04:53:39

The Workaround for Group Display Name Errors

But here’s the thing. The Get-MgGroup cmdlet (and the underlying Graph API request) work if you add the ConsistencyLevel header and an output variable to accept the count of returned items. The presence of the header makes the request into an advanced query against Entra ID.

Get-MgGroup -ConsistencyLevel Eventual -Filter "displayName eq '$Name'" -CountVariable X | Format-Table DisplayName

DisplayName
-----------
O365Grp-Team with an extraordinary long name that makes it much more than 120 characters so that we can have some fun …

Oddly, the Get-MgTeam cmdlet doesn’t support the ConsistencyLevel header so this workaround isn’t possible using this cmdlet. Given that Teams (the app) finds its teams through Graph requests, this inconsistency is maddening, and it’s probably due to a flaw in the metadata read by the ‘AutoREST’ process Microsoft runs regularly to generate the SDK cmdlets and build new versions of the SDK modules.

None of the Teams clients that I’ve tested have any problem displaying team names longer than 120 characters, so I suspect that the clients do the necessary magic when fetching lists of teams.
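Scripts that issue raw Graph requests instead of SDK cmdlets should be able to use the same workaround by adding the ConsistencyLevel header and the $count=true query parameter (roughly what the SDK sends when -CountVariable is used). This is a minimal sketch, assuming a session created with Connect-MgGraph. The variable names are illustrative, and the call itself is commented out so the block runs without a Graph connection.

```powershell
# Display name from the example above
$Name = "O365Grp-Team with an extraordinary long name that makes it much more than 120 characters so that we can have some fun with it with Graph requests"
# Double any single quotes so the name can't break the OData string literal
$SafeName = $Name.Replace("'", "''")

$RequestParams = @{
    Uri     = "https://graph.microsoft.com/v1.0/groups?`$filter=displayName eq '$SafeName'&`$count=true"
    Method  = 'GET'
    # The ConsistencyLevel header turns the request into an advanced query
    Headers = @{ ConsistencyLevel = 'eventual' }
}

# Requires a connected Microsoft Graph PowerShell SDK session:
# $Data = Invoke-MgGraphRequest @RequestParams
# $Data.value | ForEach-Object { $_.displayName }
```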

Inconsistency in Entra ID Admin Center

The developers of the Entra ID admin center must know about the 120-character limit (and not about the workaround) because they restrict group names (Figure 2).

A StackOverflow thread from 2017 reported that attempts to use the Graph to create new groups with display names longer than 120 characters resulted in errors. However, it’s possible to now use cmdlets like New-MgGroup to create groups with much longer names.

Given that the Groups Graph API allows for 256 characters, it’s yet another oddity that the Entra ID admin center focuses on a lower limit – unless the developers chose to emphasize to administrators that it’s a really bad idea to use overly long group names.

Time to Update SDK Foibles

I shall have to add this issue to my list of Microsoft Graph PowerShell SDK foibles (aka, things developers should know before they try coding PowerShell scripts using the SDK). The fortunate thing is that you’re unlikely to meet this problem in real life. At least, I hope that you are. And if you do, you’ll know what to do now.

Make sure that you’re not surprised about changes that appear inside Microsoft 365 applications or the Graph by subscribing to the Office 365 for IT Pros eBook. Our monthly updates make sure that our subscribers stay informed.

Pass Permissions with the Connect-MgGraph Scopes

Now that we’re in June 2023, the need to migrate PowerShell scripts from using the old and soon-to-be-deprecated Azure AD, AzureADPreview, and Microsoft Online Services (MSOL) modules becomes intense. Microsoft is already throttling cmdlets like New-MsOlUser that perform license assignments. These cmdlets will stop working after June 30, 2023. The other cmdlets in the affected modules will continue to work but lose support after that date. Basing operational automation on unsupported modules isn’t a great strategy, which is why it’s time to replace the cmdlets with cmdlets from the Microsoft Graph PowerShell SDK or Graph API requests.

Graph Permissions

Graph permissions are an element that people often struggle with during the conversion. After you get to know how the Graph works and how Microsoft documentation is laid out, figuring out what permissions a script needs to run is straightforward.

Understanding the difference between delegated and application permissions is a further complication that can lead developers to make incorrect assumptions. Essentially, if a script uses delegated permissions, it can only access data available to the signed-in user. Application permissions are more powerful because they allow access to data across the tenant. For example, the Planner Graph API was limited to delegated permissions for about four years. Microsoft recently upgraded the API to introduce application permission support, which now means that developers can do things like report the details about every plan in an organization.

PowerShell scripts that need to process data drawn from all mailboxes, all sites, all teams, or other sets of Microsoft 365 objects should use application permissions. RBAC for Applications is available in Exchange Online to limit script access to specific mailboxes, but it doesn’t extend past mailboxes.

Defining Permissions for a Script with Connect-MgGraph Scopes

All of which brings me to the topic of how to define Graph permissions (scopes) in scripts that use the Microsoft Graph PowerShell SDK. Two choices exist:

- Run the Connect-MgGraph cmdlet and pass the set of required permissions with the Scopes parameter.

- Run the Connect-MgGraph cmdlet and check the permissions afterward. This approach relies on the fact that the service principal used by the Microsoft Graph PowerShell SDK accrues permissions over time, meaning that the set of accumulated permissions is likely to include the ones needed for a script to run.

I do not recommend the second option. It is preferable to be precise about the permissions needed for a script and to state those permissions when connecting to the Graph.

Examples for Connect-MgGraph Scopes

My script to report the user accounts accessing Teams shared channels in other tenants depends on the CrossTenantUserProfileSharing.Read.All permission. Thus, the script connects with this command:

Connect-MgGraph -Scopes CrossTenantUserProfileSharing.Read.All

If multiple permissions are needed, pass them in a comma-separated list.

If the service principal used by the Graph SDK doesn’t already hold the permission, the SDK prompts the user to grant consent. The user can consent for their own account or, given sufficient administrative rights, consent on behalf of the organization (which is needed to access other users’ data).

The alternative is to check the required permissions against the set of permissions already possessed by the service principal for the Graph SDK. For example:

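Connect-MgGraph
# Fetch the permissions already granted to the service principal used by the SDK
[array]$CurrentPermissions = (Get-MgContext).Scopes
[array]$RequiredPermissions = "CrossTenantUserProfileSharing.Read.All"
$PermissionMissing = $false
ForEach ($Permission in $RequiredPermissions) {
    If ($Permission -notin $CurrentPermissions) {
        Write-Host ("This script needs the {0} permission to run. Please have an administrator consent to the permission and try again" -f $Permission)
        $PermissionMissing = $true
    }
}
# Break inside the loop would only exit the loop, so stop the script here instead
If ($PermissionMissing) { Break }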

After connecting, the first command fetches the set of current permissions. After stating the set of required permissions in an array, we loop through the required permissions to check that each is present in the current set. It’s a lot of bother and extra code, which is why I prefer the simplicity of stating the required permissions when connecting to the Microsoft Graph PowerShell SDK. Either way works, so it’s up to you to decide what you prefer.
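If you prefer the check-afterward approach, the comparison can be wrapped in a small function that reports every missing permission rather than stopping at the first. This is a sketch: Get-MissingGraphScope is a hypothetical name, and the only SDK detail it relies on is the Scopes property returned by Get-MgContext, as used above.

```powershell
# Hypothetical helper: return the required Graph permissions (scopes) that aren't in the current set
function Get-MissingGraphScope {
    param(
        [Parameter(Mandatory)]
        [string[]]$RequiredScopes,
        [string[]]$CurrentScopes = @()
    )
    # -notin compares strings case-insensitively, so "user.read.all" matches "User.Read.All"
    $RequiredScopes | Where-Object { $_ -notin $CurrentScopes }
}

# Usage after running Connect-MgGraph:
# [array]$Missing = Get-MissingGraphScope -RequiredScopes $RequiredPermissions -CurrentScopes (Get-MgContext).Scopes
# If ($Missing) { Write-Host ("Missing permissions: {0}" -f ($Missing -join ", ")); Break }
```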

Good luck with converting those scripts!

Support the work of the Office 365 for IT Pros team by subscribing to the Office 365 for IT Pros eBook. Your support pays for the time we need to track, analyze, and document the changing world of Microsoft 365 and Office 365.


Translating Graph API Requests to PowerShell Cmdlets Sometimes Doesn’t Go So Well

The longer you work with a technology, the more you come to know about its strengths and weaknesses. I’ve been working with the Microsoft Graph PowerShell SDK for about two years now. I like the way that the SDK makes Graph APIs more accessible to people accustomed to developing PowerShell scripts, but I hate some of the SDK’s foibles.

This article describes the Microsoft Graph PowerShell SDK idiosyncrasies that cause me the most heartburn. All are things to look out for when converting scripts from the Azure AD and MSOL modules before their deprecation (speaking of which, here’s an interesting tool that might help with this work).

No Respect for $Null

Sometimes you just don’t want to write something into a property and that’s what PowerShell’s $Null variable is for. But the Microsoft Graph PowerShell SDK cmdlets don’t like it when you use $Null. For example, let’s assume you want to create a new Azure AD user account. This code creates a hash table with the properties of the new account and then runs the New-MgUser cmdlet.

$NewUserProperties = @{
    GivenName         = $FirstName
    Surname           = $LastName
    DisplayName       = $DisplayName
    JobTitle          = $JobTitle
    Department        = $Null
    MailNickname      = $NickName
    Mail              = $PrimarySmtpAddress
    UserPrincipalName = $UPN
    Country           = $Country
    PasswordProfile   = $NewPasswordProfile
    AccountEnabled    = $true
}

$NewGuestAccount = New-MgUser @NewUserProperties

New-MgUser fails because of an invalid value for the department property, even though $Null is a valid PowerShell value.

New-MgUser : Invalid value specified for property 'department' of resource 'User'.
At line:1 char:2
+ $NewGuestAccount = New-MgUser @NewUserProperties
+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
+ CategoryInfo : InvalidOperation: ({ body = Micros...oftGraphUser1 }:<>f__AnonymousType64`1) [New-MgUser_CreateExpanded], RestException`1
+ FullyQualifiedErrorId : Request_BadRequest,Microsoft.Graph.PowerShell.Cmdlets.NewMgUser_CreateExpanded

One solution is to use a variable that holds a single space. Another is to pass $Null by running the equivalent Graph request with the Invoke-MgGraphRequest cmdlet. Neither is a good answer to something that should not happen (and we haven’t even mentioned the inability to filter on null values).
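When creating an account, a third option is to leave the property out altogether. This sketch (not the method described above) copies the hash table and drops any entry whose value is $null before splatting it. Remove-NullProperty is a hypothetical helper name. It only helps when you don’t want to set a value at all; clearing an existing value on an account is a different problem, where the Invoke-MgGraphRequest route applies.

```powershell
# Hypothetical helper: copy a hash table, dropping entries whose value is $null
function Remove-NullProperty {
    param(
        [Parameter(Mandatory)]
        [System.Collections.IDictionary]$Properties
    )
    $Clean = @{}
    foreach ($Key in $Properties.Keys) {
        # Keep $false, 0, and empty strings; drop only genuine $null values
        if ($null -ne $Properties[$Key]) {
            $Clean[$Key] = $Properties[$Key]
        }
    }
    return $Clean
}

# Usage with the properties from the example above:
# $CleanProperties = Remove-NullProperty -Properties $NewUserProperties
# $NewGuestAccount = New-MgUser @CleanProperties
```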

Ignoring the Pipeline

The pipeline is a fundamental building block of PowerShell. It allows objects retrieved by one cmdlet to pass to another cmdlet for processing. But despite the usefulness of the pipeline, the SDK cmdlets don’t support it, and the pipeline stops stone dead whenever an SDK cmdlet is asked to process incoming objects. For example:

Get-MgUser -Filter "userType eq 'Guest'" -All | Update-MgUser -Department "Guest Accounts"
Update-MgUser : The pipeline has been stopped

Why does this happen? The cmdlet that receives objects must be able to distinguish between the different objects before it can work on them. In this instance, Get-MgUser delivers a set of guest accounts, but the Update-MgUser cmdlet doesn’t know how to process each object because it identifies an object through the UserId parameter, whereas the inbound objects offer an identity in the Id property.

The workaround is to store the set of objects in an array and then process the objects with a ForEach loop.
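Here’s a minimal sketch of that workaround, wrapped in a function so it can be reused. The function name Set-GuestDepartment is hypothetical; the cmdlet calls mirror the failing pipeline example, with the Id property passed explicitly to the UserId parameter.

```powershell
# Hypothetical wrapper around the array-plus-ForEach workaround for the broken pipeline
function Set-GuestDepartment {
    param(
        [string]$Department = "Guest Accounts"
    )
    # Fetch all guest accounts into an array instead of piping them onward
    [array]$Guests = Get-MgUser -Filter "userType eq 'Guest'" -All
    foreach ($Guest in $Guests) {
        # Update-MgUser identifies the target account through UserId, so pass the Id property explicitly
        Update-MgUser -UserId $Guest.Id -Department $Department
    }
    # Report how many accounts were processed
    return $Guests.Count
}
```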

Property Casing and Fetching Data

I’ve used DisplayName to refer to the display name of objects since I started to use PowerShell with Exchange Server 2007. I never had a problem with uppercasing the D and N in the property name until the Microsoft Graph PowerShell SDK came along, only for me to find that SDK cmdlets sometimes insist on a specific casing for property names. Fail to comply, and you don’t get your data.

What’s irritating is that the restriction is inconsistent. For instance, both these commands work:

Get-MgGroup -Filter "DisplayName eq 'Ultra Fans'"
Get-MgGroup -Filter "displayName eq 'Ultra Fans'"

But let’s say that I want to find the group members with the Get-MgGroupMember cmdlet:

[array]$GroupMembers = Get-MgGroupMember -GroupId (Get-MgGroup -Filter "DisplayName eq 'Ultra Fans'" | Select-Object -ExpandProperty Id)

This works, but I end up with a set of identifiers pointing to individual group members. Then I remember from building scripts to report group membership that Get-MgGroupMember (like other membership cmdlets such as Get-MgDirectoryAdministrativeUnitMember) returns a property called AdditionalProperties holding extra information about members. So I try:

$GroupMembers.AdditionalProperties.DisplayName

Nope! But if I change the formatting to displayName, I get the member names:

$GroupMembers.AdditionalProperties.displayName
Tony Redmond
Kim Akers
James Ryan
Ben James
John C. Adams
Chris Bishop

Talk about frustrating confusion! It’s not just display names. References to any property in AdditionalProperties must use the same casing as the output, like userPrincipalName and assignedLicenses.
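This behavior is consistent with AdditionalProperties being a .NET dictionary with case-sensitive keys, unlike a PowerShell hash table created with @{}, which ignores case. Assuming that’s what is going on, one way to stop worrying about casing is to copy the entries into a hash table. ConvertTo-CaseInsensitiveTable is a hypothetical helper, and the demonstration uses a generic dictionary as a stand-in for AdditionalProperties:

```powershell
# Hypothetical helper: copy a dictionary (such as AdditionalProperties) into a PowerShell hash table
# A hash table created with @{} compares keys case-insensitively
function ConvertTo-CaseInsensitiveTable {
    param(
        [Parameter(Mandatory)]
        [System.Collections.IDictionary]$Dictionary
    )
    $Table = @{}
    foreach ($Key in $Dictionary.Keys) {
        $Table[$Key] = $Dictionary[$Key]
    }
    return $Table
}

# Demonstration with a case-sensitive generic dictionary standing in for AdditionalProperties
$Props = [System.Collections.Generic.Dictionary[string, object]]::new()
$Props["displayName"] = "Tony Redmond"
$Props.ContainsKey("DisplayName")                                   # False: the key casing doesn't match
(ConvertTo-CaseInsensitiveTable -Dictionary $Props)["DisplayName"]  # Tony Redmond

# Usage with group members:
# $GroupMembers | ForEach-Object { (ConvertTo-CaseInsensitiveTable -Dictionary $_.AdditionalProperties)["DisplayName"] }
```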

Another example is when looking for sign-in logs. This command works because the user principal name in the filter uses exactly the same casing as the value stored in the sign-in log data:

[array]$Logs = Get-MgAuditLogSignIn -Filter "UserPrincipalName eq 'james.ryan@office365itpros.com'" -All

Uppercasing part of the user principal name causes the command to return zero hits:

[array]$Logs = Get-MgAuditLogSignIn -Filter "UserPrincipalName eq 'James.Ryan@office365itpros.com'" -All

Two SDK foibles are on show here. First, the way that cmdlets return sets of identifiers and stuff information into AdditionalProperties (something often overlooked by developers who don’t expect this to be the case). Second, the inconsistent insistence by cmdlets on exact matching for property casing.

I’m told that this is all due to the way Graph APIs work. My response is that it’s not beyond the ability of software engineering to hide complexities from end users by ironing out these kinds of issues.

GUIDs and User Principal Names

Object identification for Graph requests depends on globally unique identifiers (GUIDs). Everything has a GUID. Both Graph requests and SDK cmdlets use GUIDs to find information. But some SDK cmdlets can pass user principal names instead of GUIDs when looking for user accounts. For instance, this works:

Get-MgUser -UserId Tony.Redmond@office365itpros.com

Unless you want to include the latest sign-in activity date for the account.

Get-MgUser -UserId Tony.Redmond@office365itpros.com -Property signInActivity
Get-MgUser :
{"@odata.context":"http://reportingservice.activedirectory.windowsazure.com/$metadata#Edm.String","value":"Get By Key only supports UserId and the key has to be a valid Guid"}

The reason is that the sign-in data comes from a different source, which requires a GUID to look up the sign-in activity for the account. We must therefore pass the object identifier for the account for the command to work:

Get-MgUser -UserId "eff4cd58-1bb8-4899-94de-795f656b4a18" -Property signInActivity

It’s safer to use GUIDs everywhere. Don’t depend on user principal names because a cmdlet might object – and user principal names can change.
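One way to follow that advice while still accepting user principal names as input is to resolve the identity to an object identifier first. A minimal sketch: Resolve-UserObjectId is a hypothetical helper, and it assumes that Get-MgUser accepts a user principal name for a basic lookup, as shown above.

```powershell
# Hypothetical helper: return the object identifier (GUID) for a user, given either a GUID or a UPN
function Resolve-UserObjectId {
    param(
        [Parameter(Mandatory)]
        [string]$Identity
    )
    $ParsedId = [guid]::Empty
    if ([guid]::TryParse($Identity, [ref]$ParsedId)) {
        # Already a GUID, so no lookup is needed (ToString returns the lowercase form)
        return $ParsedId.ToString()
    }
    # Treat anything else as a user principal name and fetch the account's object identifier
    return (Get-MgUser -UserId $Identity -Property Id).Id
}

# Usage:
# Get-MgUser -UserId (Resolve-UserObjectId -Identity Tony.Redmond@office365itpros.com) -Property signInActivity
```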

No Fix for Problems in V2 of the Microsoft Graph PowerShell SDK

V2.0 of the Microsoft Graph PowerShell SDK is now in preview. The good news is that V2.0 delivers some nice advances. The bad news is that it does nothing to cure the weaknesses outlined here. I’ve expressed a strong opinion that Microsoft should fix the fundamental problems in the SDK before doing anything else.

I’m told that the root cause of many of the issues is the AutoRest process Microsoft uses to generate the Microsoft Graph PowerShell SDK cmdlets from Graph API metadata. It looks like we’re stuck between a rock and a hard place. We benefit enormously by having the SDK cmdlets but the process that makes the cmdlets available introduces its own issues. Let’s hope that Microsoft gets to fix (or replace) AutoRest and deliver an SDK that’s better aligned with PowerShell standards before our remaining hair falls out due to the frustration of dealing with unpredictable cmdlet behavior.

Insight like this doesn’t come easily. You’ve got to know the technology and understand how to look behind the scenes. Benefit from the knowledge and experience of the Office 365 for IT Pros team by subscribing to the best eBook covering Office 365 and the wider Microsoft 365 ecosystem.

Know What Operating System Used by Entra ID Registered Devices

After reading an article about populating extension attributes for registered devices, a reader asked me how easy it would be to create a report about the operating systems used by registered devices. Microsoft puts a lot of effort into encouraging customers to upgrade to Windows 11, and it’s a good idea to know what’s in the device inventory. Of course, products like Intune can report this kind of information, but it’s more fun (and often more flexible) when you can extract the information yourself.

As it turns out, reporting the operating systems used by registered devices is very easy because the Microsoft Graph reports this information in the set of properties retrieved by the Get-MgDevice cmdlet from the Microsoft Graph PowerShell SDK.

PowerShell Script to Report Entra ID Registered Devices

The script described below creates a report of all registered devices and sorts the output by the last sign-in date. Microsoft calls this property ApproximateLastSignInDateTime. As the name indicates, the property stores the approximate date of the last sign-in. Entra ID doesn’t update the property every time someone uses the device to connect, and I don’t have a good rule for when updates occur. For the purpose of identifying whether a device is in use, it’s enough that the date is roughly accurate, which is why the script sorts devices by that date.

Any Windows device that hasn’t been used to sign into Entra ID in the last six months is likely not active. This isn’t true for mobile phones because they seem to sign in once and never appear again. The report generated for my tenant still has a record for a Windows Phone which last signed in on 2 December 2015. I think I can conclude that it’s safe to remove this device from my inventory.
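The six-month rule of thumb for Windows devices is easy to encode if you want a status column in the report. A minimal sketch, assuming ApproximateLastSignInDateTime arrives as a date (or is empty); the function name and status labels are hypothetical, and the six-month default comes from the text. As noted above, the rule suits Windows devices better than phones.

```powershell
# Hypothetical helper: classify a device by its approximate last sign-in date
function Get-DeviceActivityStatus {
    param(
        [Nullable[datetime]]$LastSignIn,
        [datetime]$Now = (Get-Date),
        [int]$InactiveMonths = 6
    )
    # Some devices have no recorded sign-in date
    if ($null -eq $LastSignIn) { return "Unknown" }
    # Anything older than the cutoff is a candidate for review, not automatic removal
    if ($LastSignIn -lt $Now.AddMonths(-$InactiveMonths)) { return "Possibly inactive" }
    return "Active"
}
```

In the report loop, a line such as Activity = Get-DeviceActivityStatus -LastSignIn $Device.ApproximateLastSignInDateTime would add the status to each record.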

Figuring Out Device Owners

In the last script I wrote using the Get-MgDevice cmdlet, I figured out the owner of the device by extracting the user identifier from the PhysicalIds property. While this approach works, it’s complicated. A much better approach is to use the Get-MgDeviceRegisteredOwner cmdlet which returns the user identifier for the user account of the registered owner. With this identifier, we can retrieve any account property that makes sense, such as the display name, user principal name, department, city, and country. You could easily add other properties that make sense to your organization. See this article for more information about using the Get-MgUser cmdlet to interact with user accounts.

The Big Caveat About Operating System Information

The problem with using registered devices to report operating system information is that the data isn’t reliably accurate. The operating system details noted for a device are accurate at the point of registration but degrade over time. If you want to generate accurate reports, you need to use the Microsoft Graph API for Intune.

With that caveat in mind, here’s the code to report the operating system information for Entra ID registered devices:

Connect-MgGraph -Scopes User.Read.All, Directory.Read.All
Write-Host "Finding registered devices"
[array]$Devices = Get-MgDevice -All -PageSize 999
If (!($Devices)) { Write-Host "No registered devices found - exiting" ; break }
Write-Host ("Processing details for {0} devices" -f $Devices.count)
$Report = [System.Collections.Generic.List[Object]]::new()
$i = 0
ForEach ($Device in $Devices) {
    $i++
    Write-Host ("Reporting device {0} ({1}/{2})" -f $Device.DisplayName, $i, $Devices.count)
    $DeviceOwner = $Null
    Try {
        # Find the registered owner, then fetch the properties of the owner's account
        [array]$OwnerIds = Get-MgDeviceRegisteredOwner -DeviceId $Device.Id -ErrorAction Stop
        If ($OwnerIds) {
            $DeviceOwner = Get-MgUser -UserId $OwnerIds[0].Id -Property Id, displayName, Department, OfficeLocation, City, Country, UserPrincipalName -ErrorAction Stop
        }
    } Catch {
        # Leave the owner details blank if either lookup fails
    }
    $ReportLine = [PSCustomObject][Ordered]@{
        Device             = $Device.DisplayName
        Id                 = $Device.Id
        LastSignIn         = $Device.ApproximateLastSignInDateTime
        Owner              = $DeviceOwner.DisplayName
        OwnerUPN           = $DeviceOwner.UserPrincipalName
        Department         = $DeviceOwner.Department
        Office             = $DeviceOwner.OfficeLocation
        City               = $DeviceOwner.City
        Country            = $DeviceOwner.Country
        "Operating System" = $Device.OperatingSystem
        "O/S Version"      = $Device.OperatingSystemVersion
        Registered         = $Device.RegistrationDateTime
        "Account Enabled"  = $Device.AccountEnabled
        DeviceId           = $Device.DeviceId
        TrustType          = $Device.TrustType
    }
    $Report.Add($ReportLine)
} #End Foreach Device
# Sort in order of last signed in date
$Report = $Report | Sort-Object {$_.LastSignIn -as [datetime]} -Descending
$Report | Out-GridView

Figure 1 is an example of the report as viewed through the Out-GridView cmdlet.

You can download the latest version of the script from GitHub.

An Incomplete Help

I’ve no idea whether this script will help anyone. It’s an incomplete answer to a question. However, even an incomplete answer can be useful in the right circumstances. After all, it’s just PowerShell, so use the code as you like.

Learn how to exploit the data available to Microsoft 365 tenant administrators through the Office 365 for IT Pros eBook. We love figuring out how things work.

Discover Group Membership with the Graph SDK

I’ve updated some scripts recently to remove dependencies on the Azure AD and Microsoft Online Services (MSOL) modules, which are due for deprecation on June 30, 2023 (retirement happens at the end of March for the license management cmdlets). In most cases, the natural replacement is cmdlets from the Microsoft Graph PowerShell SDK.

One example is when retrieving the groups a user account belongs to. This is an easy task when dealing with the membership of individual groups using cmdlets like:

- Get-DistributionGroupMember (fetch distribution list members).

- Get-DynamicDistributionGroupMember (fetch dynamic distribution group members).

- Get-UnifiedGroupLinks (fetch members of a Microsoft 365 group).

- Get-MgGroupMember (fetch members of an Entra ID group).

Things are a little more complex when answering a question like “find all the groups that Sean Landy belongs to.” Let’s see how we can answer the request.

The Exchange Online Approach

One method of attacking the problem often found in Exchange scripts is to use the Get-Recipient cmdlet with a filter based on the distinguished name of the mailbox belonging to an account. For example, this code reports a user’s membership of Microsoft 365 groups:

$User = Get-EXOMailbox -Identity Sean.Landy
$DN = $User.DistinguishedName
$Groups = (Get-Recipient -ResultSize Unlimited -RecipientTypeDetails GroupMailbox -Filter "Members -eq '$DN'")
Write-Host ("User is a member of {0} groups" -f $Groups.count)

The method works if the distinguished name doesn’t include special characters like apostrophes, as in the name of a user like Linda O’Shea. In these cases, extra escaping is required for PowerShell to handle the name correctly. This problem will diminish when Microsoft switches the naming mechanism for Exchange Online objects to be based on the object identifier instead of the mailbox display name. However, there are still many objects out there with distinguished names based on display names.

The Graph API Request

As I go through scripts, I check if I can remove cmdlets from other modules to make future maintenance easier. Using Get-Recipient means that a script must load the Exchange Online management module and connect to Exchange Online, so let’s remove that need by using a Graph API request. Here’s what we can do, using the Invoke-MgGraphRequest cmdlet to run the request:

$UserId = $User.ExternalDirectoryObjectId
# Advanced queries that use $count need the ConsistencyLevel header set to eventual
$Uri = ("https://graph.microsoft.com/v1.0/users/{0}/memberOf/microsoft.graph.group?`$filter=groupTypes/any(a:a eq 'unified')&`$top=200&`$orderby=displayName&`$count=true" -f $UserId)
[array]$Data = Invoke-MgGraphRequest -Uri $Uri -Headers @{ ConsistencyLevel = "eventual" }
[array]$Groups = $Data.Value
Write-Host ("User is a member of {0} groups" -f $Groups.count)

We get the same result (always good) and the Graph request runs about twice as fast as Get-Recipient does.

Because the call is limited to Microsoft 365 groups, which don’t support nesting, I don’t have to worry about transitive membership. If I did, I’d use the user transitiveMemberOf API.
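The request above fetches at most 200 groups ($top=200). If a user might belong to more, the Graph returns the remaining results through an @odata.nextLink URL that the script must follow (SDK cmdlets with an -All parameter do this for you). A minimal sketch of a paging loop; Get-GraphPagedResult is a hypothetical name, and it sends the ConsistencyLevel header because the request uses $count:

```powershell
# Hypothetical helper: run a Graph GET request and follow @odata.nextLink until all pages are fetched
function Get-GraphPagedResult {
    param(
        [Parameter(Mandatory)]
        [string]$Uri,
        [hashtable]$Headers = @{ ConsistencyLevel = "eventual" }
    )
    $Results = [System.Collections.Generic.List[object]]::new()
    $NextLink = $Uri
    while ($NextLink) {
        # Each response holds one page of items in value, plus @odata.nextLink if more pages remain
        $Page = Invoke-MgGraphRequest -Method GET -Uri $NextLink -Headers $Headers
        foreach ($Item in $Page.value) {
            $Results.Add($Item)
        }
        $NextLink = $Page.'@odata.nextLink'
    }
    # Emitting the list sends each item down the pipeline
    $Results
}

# Usage with the URI built above:
# [array]$Groups = Get-GraphPagedResult -Uri $Uri
```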

Using the SDK Get-MgUserMemberOf Cmdlet

The Microsoft Graph PowerShell SDK contains cmdlets based on Graph requests. The equivalent cmdlet is Get-MgUserMemberOf. This returns memberships of all group types known to Entra ID, so it includes distribution lists and security groups. To return the set of Microsoft 365 groups, apply a filter after retrieving the group information from the Graph.

[array]$Groups = Get-MgUserMemberOf -UserId $UserId -All | Where-Object {$_.AdditionalProperties["groupTypes"] -eq "Unified"}

Write-Host (“User is a member of {0} groups” -f $Groups.count)

Notice that the filter looks for a specific type of group in a value in the AdditionalProperties property of each group. If you run Get-MgUserMemberOf without any other processing, the cmdlet appears to return a simple list of group identifiers. For example:

$Groups

Id                                   DeletedDateTime
--                                   ---------------
b62b4985-bcc3-42a6-98b6-8205279a0383
64d314bb-ea0c-46de-9044-ae8a61612a6a
87b6079d-ddd4-496f-bff6-28c8d02e9f8e
82ae842d-61a6-4776-b60d-e131e2d5749c

However, the AdditionalProperties property is also available for each group. This property contains a hash table holding other group properties that can be interrogated. For instance, here’s how to find out whether the group supports private or public access:

$Groups[0].AdditionalProperties['visibility']
Private

When looking up a property in the hash table, remember to use the exact form of the key. For instance, this works to find the display name of a group:

$Groups[0].AdditionalProperties['displayName']

But this doesn’t because the uppercase D creates a value not found in the hash table:

$Groups[0].AdditionalProperties['DisplayName']

People starting with the Microsoft Graph PowerShell SDK are often confused when cmdlets like Get-MgUserMemberOf, Get-MgGroupMember, and Get-MgGroupOwner appear to return just identifiers, because they don’t see or grasp the importance of the AdditionalProperties property. It literally contains the additional properties of each object, other than its identifier.
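Because the useful values live in AdditionalProperties, it can help to flatten each returned object into a custom object before formatting or exporting. This is a sketch, not an SDK feature: ConvertTo-FlatGraphObject is a hypothetical helper, and the property names you pass must use the Graph’s casing (displayName, not DisplayName).

```powershell
# Hypothetical helper: merge an object's Id with selected AdditionalProperties values
function ConvertTo-FlatGraphObject {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        $InputObject,
        [Parameter(Mandatory)]
        [string[]]$Property
    )
    process {
        $Row = [ordered]@{ Id = $InputObject.Id }
        foreach ($Name in $Property) {
            # Keys must match the casing used by the Graph (for example, displayName)
            $Row[$Name] = $InputObject.AdditionalProperties[$Name]
        }
        [pscustomobject]$Row
    }
}

# Usage:
# $Groups | ConvertTo-FlatGraphObject -Property displayName, visibility | Format-Table
```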

Here’s another example of using information from AdditionalProperties. The details provided for a group don’t include its owners. To fetch the owner information for a group, run the Get-MgGroupOwner cmdlet like this:

$Group = $Groups[15]
[array]$Owners = Get-MgGroupOwner -GroupId $Group.Id | Select-Object -ExpandProperty AdditionalProperties
$OwnersOutput = $Owners.displayName -join ", "
Write-Host ("The owners of the {0} group are {1}" -f $Group.AdditionalProperties['displayName'], $OwnersOutput)

If necessary, use the Get-MgGroupTransitiveMember cmdlet to fetch transitive memberships of groups.

The Graph SDK Should be More Intelligent

It would be nice if the Microsoft Graph PowerShell SDK didn’t hide so much valuable information in AdditionalProperties and wasn’t quite so picky about the exact format of property names. Apparently, the SDK cmdlets behave in this manner because it’s how Graph API requests work when they return sets of objects. That assertion might well be true, but it would be nice if the SDK applied some extra intelligence in the way it handles data.

Insight like this doesn’t come easily. You’ve got to know the technology and understand how to look behind the scenes. Benefit from the knowledge and experience of the Office 365 for IT Pros team by subscribing to the best eBook covering Office 365 and the wider Microsoft 365 ecosystem.

Finding Azure AD Guest Accounts in Microsoft 365 Groups

The article explaining how to report old guest accounts and their membership of Microsoft 365 Groups (and teams) in a tenant is very popular and many people use its accompanying script. The idea is to find guest accounts above a certain age (365 days – configurable in the script) and report the groups these guests are members of. Any old guest accounts that aren’t in any groups are candidates for removal.

The script uses an old technique featuring the distinguished name of guest accounts to scan for group memberships using the Get-Recipient cmdlet. The approach works, but the variation of values that can exist in distinguished names due to the inclusion of characters like apostrophes and vertical lines means that some special processing is needed to make sure that lookups work. Achieving consistency in distinguished names might be one of the reasons for Microsoft’s plan to make Exchange Online mailbox identification more effective.

In any case, time moves on and code degrades. I wanted to investigate how to use the Microsoft Graph PowerShell SDK to replace Get-Recipient. The script already uses the SDK to find Azure AD guest accounts with the Get-MgUser cmdlet.

The Graph Foundation

Graph APIs provide the foundation for all SDK cmdlets. The first thing to find is an appropriate API to find group membership. I started off with getMemberGroups. The PowerShell example for the API suggests that the Get-MgDirectoryObjectMemberGroup cmdlet is the one to use. For example:

$UserId = (Get-MgUser -UserId Terry.Hegarty@Office365itpros.com).Id
[array]$Groups = Get-MgDirectoryObjectMemberGroup -DirectoryObjectId $UserId -SecurityEnabledOnly:$False

The cmdlet works and returns a list of group identifiers that can be used to retrieve information about the groups that the user belongs to. For example:

Get-MgGroup -GroupId $Groups[0] | Format-Table DisplayName, Id, GroupTypes

DisplayName                     Id                                   GroupTypes
-----------                     --                                   ----------
All Tenant Member User Accounts 05ecf033-b39a-422c-8d30-0605965e29da {DynamicMembership, Unified}

However, because Get-MgDirectoryObjectMemberGroup returns a simple list of group identifiers, the developer must call Get-MgGroup for each group to retrieve group properties. Not only is this extra work, but calling Get-MgGroup repeatedly also becomes very inefficient as the number of guests and their group memberships increase.

Looking Behind the Scenes with Graph X-Ray

Both the Azure AD admin center and the Entra admin center list the groups that user accounts (tenant members and guests) belong to. Performance is snappy, and it seemed unlikely that the code was making multiple calls to retrieve the properties of each group. Many sections of these admin centers use Graph API requests to fetch information, and the Graph X-Ray tool reveals those requests. Looking at the output, it’s interesting to see that the admin center uses the beta Graph endpoint with the user memberOf API (Figure 1).

We can reuse the call made by the Azure AD admin center to create the query (containing the object identifier for the user account) and run the query using the SDK Invoke-MgGraphRequest cmdlet. One change made to the command is to include a filter to select only Microsoft 365 groups. If you omit the filter, the Graph returns all the groups a user belongs to, including security groups and distribution lists. The group information is in an array in the Value property returned by the Graph request. For convenience, we put the data into a separate array.

$Uri = ("https://graph.microsoft.com/beta/users/{0}/memberOf/microsoft.graph.group?`$filter=groupTypes/any(a:a eq 'unified')&`$top=200&`$orderby=displayName&`$count=true" -f $Guest.Id)
[array]$Data = Invoke-MgGraphRequest -Uri $Uri -Headers @{ ConsistencyLevel = "eventual" }
[array]$GuestGroups = $Data.Value

Using the Get-MgUserMemberOf Cmdlet

The equivalent SDK cmdlet is Get-MgUserMemberOf. To return the set of groups an account belongs to, the command is:

[array]$Data = Get-MgUserMemberOf -UserId $Guest.Id -All
[array]$GuestGroups = $Data.AdditionalProperties

The format of returned data marks a big difference between the SDK cmdlet and the Graph API request. The cmdlet returns group information in a hash table in the AdditionalProperties array while the Graph API request returns a simple array called Value. To retrieve group properties from the hash table, we must enumerate through its values. For instance, to return the names of the Microsoft 365 groups in the hash table, we do something like this:

[Array]$GroupNames = $Null
ForEach ($Item in $GuestGroups.GetEnumerator()) {
    # groupTypes is an array, so -eq returns any matching value (case-insensitively)
    If ($Item.groupTypes -eq "unified") { $GroupNames += $Item.displayName }
}
$GroupNames = $GroupNames -join ", "

SDK cmdlets can be inconsistent in how they return data. It’s just one of the charms of working with cmdlets that are automatically generated from code. Hopefully, Microsoft will do a better job of ironing out inconsistencies when they release V2.0 of the SDK sometime later in 2023.

A Get-MgUserTransitiveMemberOf cmdlet is also available to return the membership of nested groups. We don’t need to do this because we’re only interested in Microsoft 365 groups, which don’t support nesting. The cmdlet works in much the same way:

[array]$TransitiveData = Get-MgUserTransitiveMemberOf -UserId Kim.Akers@office365itpros.com -All

The Script Based on the SDK

Because of the extra complexity in accessing group properties, I decided to use a modified version of the Graph API request from the Azure AD admin center. The request runs through the SDK’s Invoke-MgGraphRequest cmdlet, so the script still relies on the SDK, and I think the decision is justified.

When revising the script, I made some other improvements, including adding a basic assessment of whether a guest account is stale or very stale. The assessment is intended to highlight if I should consider removing these accounts because they’re obviously not being used. Figure 2 shows the output of the report.

You can download a copy of the script from GitHub.

Cleaning up Obsolete Azure AD Guest Accounts

Reporting obsolete Azure AD guest accounts is nice. Cleaning up old junk from Azure AD is even better. The script generates a PowerShell list with details of all guests over a certain age and the groups they belong to. To generate a list of the very stale guest accounts, filter the list:

[array]$DeleteAccounts = $Report | Where-Object {$_.StaleNess -eq "Very Stale"}

To complete the job and remove the obsolete guest accounts, use a simple loop that calls Remove-MgUser for each account:

ForEach ($Account in $DeleteAccounts) {
   Write-Host ("Removing guest account for {0} with UPN {1}" -f $Account.Name, $Account.UPN)
   Remove-MgUser -UserId $Account.Id
}
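Deleting accounts is irreversible once the deleted items retention period passes, so a dry run before the real thing is worthwhile. One way to get that is to wrap the loop in an advanced function that supports ShouldProcess, which gives it the standard -WhatIf and -Confirm switches. The wrapper name Remove-StaleGuest is hypothetical; it assumes the Name, UPN, and Id properties used in the loop above.

```powershell
function Remove-StaleGuest {
    # Hypothetical wrapper around Remove-MgUser. SupportsShouldProcess adds
    # -WhatIf and -Confirm; ConfirmImpact High prompts by default.
    [CmdletBinding(SupportsShouldProcess, ConfirmImpact = 'High')]
    param(
        [Parameter(Mandatory)] [object[]] $Accounts
    )
    foreach ($Account in $Accounts) {
        $Target = '{0} ({1})' -f $Account.Name, $Account.UPN
        # ShouldProcess returns $false under -WhatIf or a declined prompt
        if ($PSCmdlet.ShouldProcess($Target, 'Remove guest account')) {
            Remove-MgUser -UserId $Account.Id
        }
    }
}
```

Run `Remove-StaleGuest -Accounts $DeleteAccounts -WhatIf` to list what would go, then `Remove-StaleGuest -Accounts $DeleteAccounts -Confirm:$false` to perform the deletions without a prompt for each account.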

Obsolete or stale guest accounts are not harmful in themselves, but their presence slows down processing such as PowerShell scripts that enumerate directory accounts. For that reason, it's a good idea to clean out unwanted guests periodically.

Learn about mastering the Microsoft Graph PowerShell SDK and the Microsoft 365 PowerShell modules by subscribing to the Office 365 for IT Pros eBook. Use our experience to understand what’s important and how best to protect your tenant.

Pay as You Go Model for Microsoft 365 APIs

About fifteen months ago, Microsoft introduced the notion of metered APIs, where those who consume the APIs pay for the resources they use. The pay-as-you-go (PAYG) model evolved further in July 2022 when Microsoft started to push ISVs to use the new Teams export API instead of Exchange Web Services (EWS) for their backup products. The Teams export API is a metered API and is likely to be the test case to measure customer acceptance of the PAYG model.

So far, I haven't heard many positive reactions to the development. Some wonder how Microsoft can force ISVs to use an API when nobody knows how high the metering charges will climb. Others ask how Microsoft can introduce an export API for backup when it doesn't have an equivalent import API to allow tenants to restore data to Teams. I don't understand this either, as it seems logical to introduce export and import capabilities at the same time. We live in interesting times!

PAYG with Syntex Backup

To be fair to Microsoft, it intends to follow the same PAYG route with the new backup service it plans to introduce in 2023 as part of the Syntex content management suite. Customers will have to use an Azure subscription to pay for backups of SharePoint Online, OneDrive for Business, and Exchange Online (so far, Microsoft is leaving Teams backup to ISVs).

All of which brings me to the December 2 post from the Microsoft Graph development team where Microsoft attempts to describe what they’re doing with different Microsoft 365 APIs. Like many Microsoft texts, too many words disguise the essential facts of the matter.

Three Microsoft 365 API Tiers

Essentially, Microsoft plans to operate three Microsoft 365 API tiers:

- Standard: The regular Graph-based and other APIs that allow Microsoft 365 tenants to access and work with their data.

- High-capacity: Metered APIs that deal with high-volume operations like the streaming of data out of Microsoft 365 for backups or the import of data into Microsoft 365.

- Advanced: APIs developed by Microsoft to deliver new functionality. Microsoft points to Azure Communications Services as an example. These APIs allow developers to add the kind of communication options that are available in Teams to their applications.

My reading of the situation is that Microsoft won’t charge for standard APIs because this would interfere with customer access to their data. Microsoft says that standard APIs will remain the default endpoint.

However, Microsoft very much wants to charge for high-capacity APIs used by “business-critical applications with high usage patterns.” The logic here is that these APIs strain the resources available within the service. To ensure that Microsoft can meet customer expectations, they need to deploy more resources to meet the demand and someone’s got to pay for those resources. By using a PAYG model, Microsoft will charge for actual usage of resources.

Microsoft also wants customers to pay for advanced APIs. In effect, this is like an add-on license such as Teams Premium. If you want to use the bells and whistles enabled by an advanced API, you must pay for the privilege. It’s a reasonable stance.

Problem Areas for Microsoft 365 APIs

I don’t have a problem with applying a tiered model for APIs, especially if the default tier continues with free access. The first problem here is in communications, where Microsoft has failed to sell their approach to ISVs and tenants. The lack of clarity and obfuscation is staggering for an organization that employs masses of marketing and PR staff.

The second issue is the lack of data about how much PAYG is likely to cost. Few want to write an open-ended check to Microsoft for API usage. Microsoft is developing the model and understands how the APIs work, so it should be able to give indicative pricing for different scenarios. For instance, if I have 100 teams generating 35,000 new channel conversations and 70,000 chats monthly, how much will a backup cost? Or if my tenant generates new and updated documents at the typical rate observed by Microsoft across all tenants of a certain size, how much will a Syntex backup cost?

The last issue is the heavy-handed approach Microsoft has taken with backup ISVs. Being told that you must move from a working, well-sorted, and totally understood API to a new, untested, and metered API is not a recipe for good ISV relationships. Microsoft needs its ISVs to support its tiered API model. It would be so much better if a little less arrogance and a little more humility were evident in Microsoft's communication. Just because you're the big dog who owns the API bone doesn't mean that you need to fight with anyone who wants a lick.

Make sure that you’re not surprised about changes that appear inside Office 365 applications by subscribing to the Office 365 for IT Pros eBook. Our monthly updates make sure that our subscribers stay informed.

Understanding What the Graph Usage Reports API Generates

Last month, I discussed a new version of the Microsoft 365 user activity report based on the 180-day lookback period now supported by the Graph usage reports API. This provoked some questions about the API that are worth clarifying.

Single Point of Reference for Reports

Microsoft 365 administrative interfaces (like the Teams admin center) generate their usage reports from the data retrieved via the API. The API is the single point of contact for any usage report found inside Microsoft 365. This wasn't always the case, but it is now. The Teams admin center currently limits reports to the previous 90 days, while the Microsoft 365 admin center supports 180 days.

Concealing Display Names

Because the API is used everywhere, the setting to conceal user, group, and site display names available in the Reports section of Org Settings in the Microsoft 365 admin center controls all data retrieved with the API, including any reports that you create. If you want to see display names in usage data, the setting to obfuscate this information must be turned off (Figure 1).

Teams was the last workload to apply concealment to usage data. All workloads that generate usage data now support this feature.

Switching Concealment On and Off

In a script, you can check if concealment is active and disable it temporarily to allow display names to be fetched using the Graph Usage Reports API. For example:

# First, find if the data is obscured. Initialize the flag so a stale value from an earlier run isn't reused
$ObscureFlag = $False
$Display = Invoke-MgGraphRequest -Method Get -Uri 'https://graph.microsoft.com/beta/admin/reportSettings'
If ($Display['displayConcealedNames'] -eq $True) { # data is obscured, so reset it to allow the report to run
   $ObscureFlag = $True
   Write-Host "Setting tenant data concealment for reports to False" -foregroundcolor red
   Invoke-MgGraphRequest -Method PATCH -Uri 'https://graph.microsoft.com/beta/admin/reportSettings' -Body (@{"displayConcealedNames"= $false} | ConvertTo-Json) }

To reverse the process, update the setting to True:

# And reset obscured data if necessary
If ($ObscureFlag -eq $True) {
   Write-Host "Resetting tenant data concealment for reports to True" -foregroundcolor red
   Invoke-MgGraphRequest -Method PATCH -Uri 'https://graph.microsoft.com/beta/admin/reportSettings' -Body (@{"displayConcealedNames"= $true} | ConvertTo-Json) }
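If the report code between those two steps throws an error, the script stops and the tenant is left with display names revealed. One way to guard against that is to put the reset in a finally block, which runs whether or not the report succeeds. The sketch below assumes the same beta reportSettings endpoint and an existing Connect-MgGraph session with permission to update report settings. The function name Invoke-WithRevealedReportNames is hypothetical.

```powershell
function Invoke-WithRevealedReportNames {
    # Hypothetical helper: turns off display-name concealment if it is on,
    # runs the supplied script block, then restores the original setting in
    # a finally block so a failed report doesn't leave names revealed.
    param(
        [Parameter(Mandatory)] [scriptblock] $ScriptBlock
    )
    $Uri = 'https://graph.microsoft.com/beta/admin/reportSettings'
    $Settings = Invoke-MgGraphRequest -Method GET -Uri $Uri
    $WasConcealed = [bool] $Settings['displayConcealedNames']
    try {
        if ($WasConcealed) {
            $Body = @{ displayConcealedNames = $false } | ConvertTo-Json
            Invoke-MgGraphRequest -Method PATCH -Uri $Uri -Body $Body | Out-Null
        }
        # Output from the script block becomes the function's output
        & $ScriptBlock
    }
    finally {
        if ($WasConcealed) {
            $Body = @{ displayConcealedNames = $true } | ConvertTo-Json
            Invoke-MgGraphRequest -Method PATCH -Uri $Uri -Body $Body | Out-Null
        }
    }
}
```

For example, `$Data = Invoke-WithRevealedReportNames -ScriptBlock { <report code> }` runs the report code with names visible and puts concealment back afterwards.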

Teams Data Needs Interpretation

If you look at the information returned by the Graph Usage Reports API for Teams usage, the activity level reported for teams needs some interpretation. For instance, here’s some information I extracted for the team we use to manage the Office 365 for IT Pros eBook. I used a 180-day lookback to extract the data with this script.

Name            : Ultimate Guide to Office 365
LastActivity    : 2022-09-06
AccessType      : Private
Id              : 33b07753-efc6-47f5-90b5-13bef01e25a6
IsDeleted       : False
ActiveUsers     : 17
ActiveExtUsers  : 7
Guests          : 8
ActiveChannels  : 11
SharedChannels  : 1
Posts           : 51
Replies         : 511
Channelmessages : 674
Reactions       : 241
Mentions        : 185
UrgentMessages  : 0
Meetings        : 0

The report data shows that:

- The last activity noted for the team was September 6, 2022. The usage report data is always a couple of days behind.

- The team has private membership and is not deleted. Usage data includes deleted teams that have logged some activity within the lookback period.

- The number of active users is 17. However, the team has 2 owners and 9 members, so this figure is odd.

- The number of active external users is 7. This matches the 7 guest members in the team.

- The number of guest accounts is 8. Currently, the team has only 7 guests, but a team owner or administrator could have removed a guest account during the lookback period. The shortest lookback period is 7 days. When I use this to query the usage data, it reports 7 guests.

- The number of active channels is 11. There are currently 10 channels in the team plus one deleted channel. Deleted channels remain available for restore for a 21-day period. In this case, the deleted channel is a shared channel that I deleted many months ago, so that’s worth investigating. The Graph API for channels doesn’t list deleted channels, so I can’t check its deletion status or deletion date. What’s also weird is that no audit records exist for the deletion of this channel…

- There is one other shared channel in the team.

Message Counts are Even More Confusing

The data reported for message volume within a team is where things get interesting. The numbers of reactions and mentions are easy to understand (and you can validate the reactions number through audit records). Things are less clear with posts, replies, and channel messages. The Microsoft 365 admin center avoids confusion by only reporting the count of channel messages (674). The Teams admin center reports posts (51), replies (511), and channel messages.

According to Microsoft documentation, "Channel messages is the number of unique messages that the user posted in a team channel during the specified time period." I think the reference to "the user" should be "users" as this makes more sense. However, adding posts and replies (51 + 511) only gets me to 562, which is 112 less than the channel message count of 674. Reactions could be considered a form of reply, but 241 reactions is more than the 112 gap, so there's a mystery as to how Microsoft calculates the number of channel messages.

The counted messages include only those posted by users. They don’t include messages posted by applications or those that come through the inbound webhook connector.

The Groups and Teams activity report script reads the usage data for a team to determine whether it is active or potentially obsolete. In that instance, a precise measurement of message activity isn't a real problem because we accept that if a team has some messaging activity, it's not obsolete. However, if you're interested in tracking the exact number of messages generated per team, it's best to use the total of posts and replies.
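To track message volume this way, you can add a posts-plus-replies total to each team record and keep the unexplained gap alongside it for reference. This sketch assumes records shaped like the output shown earlier (Posts, Replies, and Channelmessages properties). It casts the values to integers in case they come from CSV data as strings. The function name Add-MessageTotal and the MessageTotal and UnexplainedGap property names are hypothetical.

```powershell
function Add-MessageTotal {
    # Hypothetical helper: adds MessageTotal (posts + replies) and
    # UnexplainedGap (reported channel messages minus that total) to each
    # team usage record passed in on the pipeline.
    param(
        [Parameter(Mandatory, ValueFromPipeline)] [object] $Team
    )
    process {
        $Total = [int] $Team.Posts + [int] $Team.Replies
        $Gap = [int] $Team.Channelmessages - $Total
        $Team | Add-Member -NotePropertyName MessageTotal -NotePropertyValue $Total -Force
        $Team | Add-Member -NotePropertyName UnexplainedGap -NotePropertyValue $Gap -Force
        $Team
    }
}
```

Applied to the team shown above, this yields a MessageTotal of 562 and an UnexplainedGap of 112.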

Seeking Understanding from the Graph Usage Reports API

The old rule applies: don't accept data until you understand its meaning. It's nice that Teams usage data is available for tenants to browse and download. It would be even nicer if the meaning of the data were clearer.

Learn how to exploit the data available to Microsoft 365 tenant administrators through the Office 365 for IT Pros eBook. We love figuring out how things work.

Introducing the Tenant Admin Namespace for SharePoint Graph Settings

One of the notable gaps in Microsoft Graph API coverage has been administrative interfaces for SharePoint Online and Exchange Online, even though these are the two basic Microsoft 365 workloads. A small but valuable step in the right direction happened with the appearance of the settings resource type in the TenantAdmin namespace. For now, coverage is sparse and only deals with some of the tenant settings that administrators can manage using the Set-SPOTenant PowerShell cmdlet. But it's a start, and you can see how Microsoft might develop the namespace to handle programmatic access to settings that currently can only be managed through an admin portal.

Options to Manage SharePoint Online Settings

SharePoint Online tenant-wide settings apply to SharePoint Online sites and OneDrive for Business accounts. As with all Graph APIs, apps need permissions to make requests. The read-only permission is SharePointTenantSettings.Read.All, while you'll need the SharePointTenantSettings.ReadWrite.All permission to update settings.

Three methods are available to use the new API:

- The Graph Explorer.

- A dedicated app registered in Entra ID.

- The Microsoft Graph PowerShell SDK.
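With the SDK, the simplest route is Invoke-MgGraphRequest. The sketch below assumes the settings are exposed at the beta endpoint https://graph.microsoft.com/beta/admin/sharepoint/settings and that you've already run Connect-MgGraph with the appropriate SharePointTenantSettings scope. The function names Get-SharePointTenantSetting and Set-SharePointTenantSetting are hypothetical. The update function accepts a hashtable of property names and values rather than hard-coding any particular setting, and it supports -WhatIf.

```powershell
function Get-SharePointTenantSetting {
    # Reads tenant-wide SharePoint Online settings.
    # Assumes Connect-MgGraph -Scopes SharePointTenantSettings.Read.All
    $Uri = 'https://graph.microsoft.com/beta/admin/sharepoint/settings'
    Invoke-MgGraphRequest -Method GET -Uri $Uri
}

function Set-SharePointTenantSetting {
    # Updates one or more settings with a PATCH request.
    # Assumes Connect-MgGraph -Scopes SharePointTenantSettings.ReadWrite.All
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)] [hashtable] $Setting
    )
    $Uri = 'https://graph.microsoft.com/beta/admin/sharepoint/settings'
    $Body = $Setting | ConvertTo-Json -Depth 5
    if ($PSCmdlet.ShouldProcess('SharePoint tenant settings', "Update $($Setting.Keys -join ', ')")) {
        Invoke-MgGraphRequest -Method PATCH -Uri $Uri -Body $Body
    }
}
```

Running `$Settings = Get-SharePointTenantSetting` returns the current values. Check the names of the properties you want to change in that output before passing them to Set-SharePointTenantSetting.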